knitr::opts_chunk$set(

echo = TRUE, # Display code chunks

eval = FALSE, # Avoid automatic execution

warning = FALSE, # Hide warnings

message = FALSE, # Hide messages

fig.width = 6, # Set default plot width

fig.height = 4, # Set default plot height

fig.align = "center", # Center align plots

comment = "" # Prevents '##' in output

)Overview

This lab notebook documents the process of identifying long non-coding RNAs (lncRNAs) from RNA-seq data. The pipeline includes data acquisition, genome alignment, transcript assembly, filtering, and classification.

Setup

Variables

# Global R options

knitr::opts_chunk$set(echo = TRUE)

# Define key paths and tool directories

DATA_DIR <- "../data/01-lncRNA"

OUTPUT_DIR <- "../output/01-lncRNA"

THREADS <- "42"

FASTQ_SOURCE <- "https://gannet.fish.washington.edu/Atumefaciens/20230519-E5_coral-fastqc-fastp-multiqc-RNAseq/P_meandrina/trimmed/"

FASTQ_SUFFIX <- "fastq.gz"

GENOME_SOURCE <- "https://owl.fish.washington.edu/halfshell/genomic-databank/Pocillopora_meandrina_HIv1.assembly.fasta"

GTF_SOURCE <- "https://raw.githubusercontent.com/urol-e5/timeseries_molecular/d5f546705e3df40558eeaa5c18b122c79d2f4453/F-Ptua/data/Pocillopora_meandrina_HIv1.genes-validated.gtf"

GFF_SOURCE <- "https://gannet.fish.washington.edu/seashell/bu-github/deep-dive-expression/F-Ptuh/data/Pocillopora_meandrina_HIv1.genes-validated.gff3"

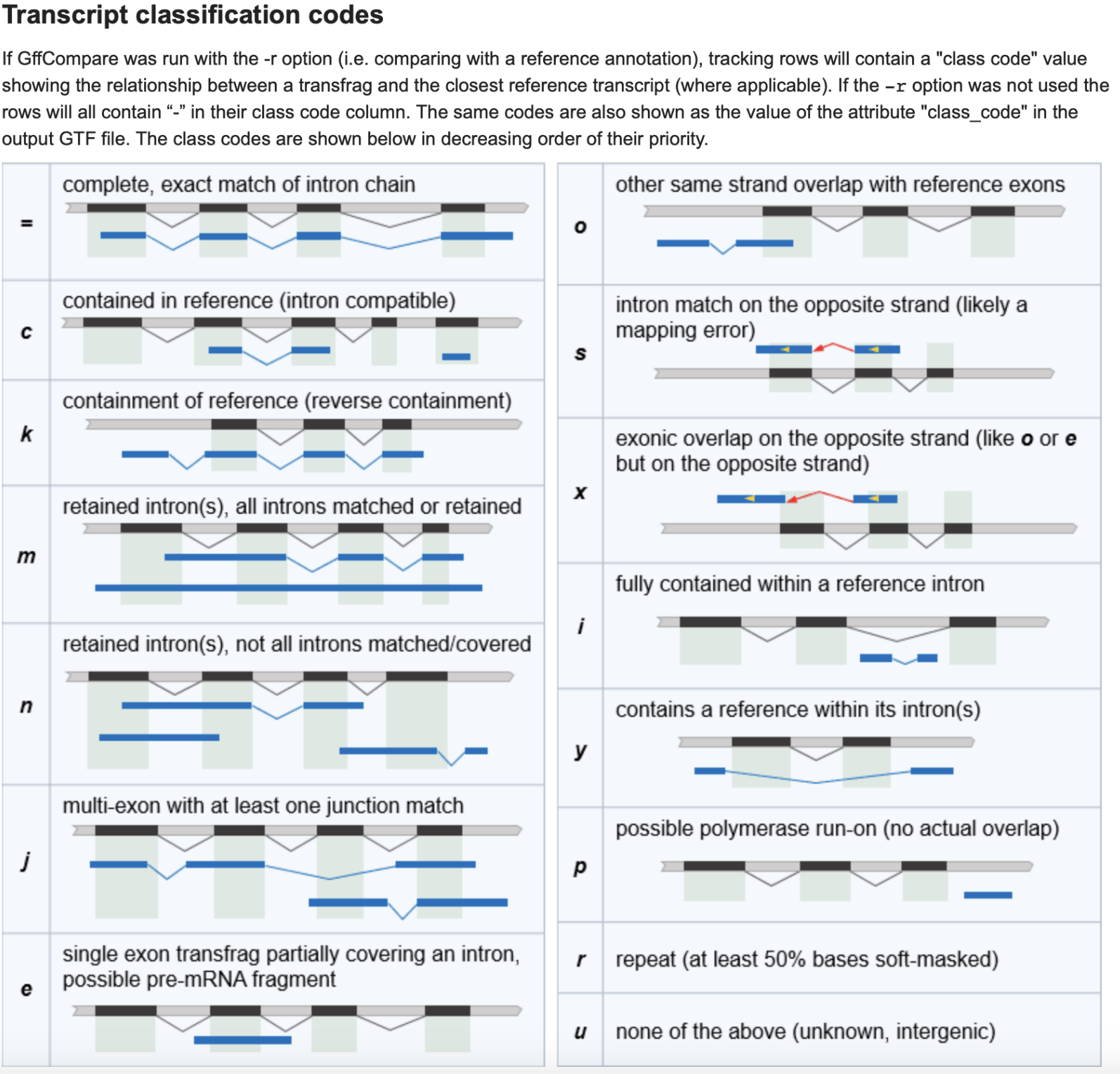

GFFPATTERN <- 'class_code "u"|class_code "x"|class_code "o"|class_code "i"'

#RAVEN

HISAT2_DIR <- "/home/shared/hisat2-2.2.1/"

SAMTOOLS_DIR <- "/home/shared/samtools-1.12/"

STRINGTIE_DIR <- "/home/shared/stringtie-2.2.1.Linux_x86_64"

GFFCOMPARE_DIR <- "/home/shared/gffcompare-0.12.6.Linux_x86_64"

BEDTOOLS_DIR <- "/home/shared/bedtools2/bin"

CPC2_DIR <- "/home/shared/CPC2_standalone-1.0.1"

CONDA_PATH <- "/opt/anaconda/anaconda3/bin/conda"

#KLONE

# HISAT2_DIR <- ""

# SAMTOOLS_DIR <- ""

# STRINGTIE_DIR <- ""

# GFFCOMPARE_DIR <- "/srlab/programs/gffcompare-0.12.6.Linux_x86_64/"

# BEDTOOLS_DIR <- ""

# CPC2_DIR <- "/srlab/programs/CPC2_standalone-1.0.1/bin/"

# CONDA_PATH <- ""

GENOME_FASTA <- file.path(DATA_DIR, "genome.fasta")

GENOME_GTF <- file.path(DATA_DIR, "genome.gtf")

GENOME_GFF <- file.path(DATA_DIR, "genome.gff")

FASTQ_DIR <- file.path(DATA_DIR, "fastq")

GENOME_INDEX <- file.path(OUTPUT_DIR, "genome.index")

# Export these as environment variables for bash chunks.

Sys.setenv(

THREADS = THREADS,

DATA_DIR = DATA_DIR,

FASTQ_SOURCE = FASTQ_SOURCE,

FASTQ_SUFFIX = FASTQ_SUFFIX,

OUTPUT_DIR = OUTPUT_DIR,

GENOME_SOURCE = GENOME_SOURCE,

GTF_SOURCE = GTF_SOURCE,

GFF_SOURCE = GFF_SOURCE,

HISAT2_DIR = HISAT2_DIR,

SAMTOOLS_DIR = SAMTOOLS_DIR,

STRINGTIE_DIR = STRINGTIE_DIR,

GFFCOMPARE_DIR = GFFCOMPARE_DIR,

BEDTOOLS_DIR = BEDTOOLS_DIR,

CPC2_DIR = CPC2_DIR,

CONDA_PATH = CONDA_PATH,

GENOME_FASTA = GENOME_FASTA,

GENOME_GTF = GENOME_GTF,

GENOME_GFF = GENOME_GFF,

FASTQ_DIR = FASTQ_DIR,

GENOME_INDEX = GENOME_INDEX

)mkdir -p "${DATA_DIR}"

mkdir -p "${OUTPUT_DIR}"Download Genome and Reads for Hisat

wget -nv -r \

--no-directories --no-parent \

-P ${FASTQ_DIR} \

-A "*${FASTQ_SUFFIX}" ${FASTQ_SOURCE}ls ${FASTQ_DIR}

curl -o "${GENOME_FASTA}" "${GENOME_SOURCE}"

curl -o "${GENOME_GTF}" "${GTF_SOURCE}"

curl -o "${GENOME_GFF}" "${GFF_SOURCE}"output_fasta=$(head -1 "${GENOME_FASTA}")

output_gff=$(head -2 "${GENOME_GFF}")

output_gtf=$(head -1 "${GENOME_GTF}")

if [[ "$output_fasta" == *html* || "$output_gff" == *html* || "$output_gtf" == *html* ]]; then

echo "FAIL - FFS you downloaded a HTML not and genome feature file!"

else

echo "$output_fasta"

echo "$output_gff"

echo "$output_gtf"

fiHISAT

"${HISAT2_DIR}hisat2_extract_exons.py" "${GENOME_GTF}" > "${OUTPUT_DIR}/exon.txt"

"${HISAT2_DIR}hisat2_extract_splice_sites.py" "${GENOME_GTF}" > "${OUTPUT_DIR}/splice_sites.txt"

"${HISAT2_DIR}hisat2-build" \

-p "${THREADS}" \

"${GENOME_FASTA}" \

"${GENOME_INDEX}" \

--exon "${OUTPUT_DIR}/exon.txt" \

--ss "${OUTPUT_DIR}/splice_sites.txt" \

2> "${OUTPUT_DIR}/hisat2-build_stats.txt"# Loop over every file ending in .fastq.gz that contains "_R2_"

for r2 in "${FASTQ_DIR}"/*_R2_*."${FASTQ_SUFFIX}"; do

# Get the basename (filename without path)

base=$(basename "$r2")

# Derive a sample name by taking everything before "_R2_"

sample="${base%%_R2_*}"

# Construct the corresponding R1 filename by replacing "_R2_" with "_R1_"

r1="${r2/_R2_/_R1_}"

# Define the output SAM file name using the sample name

output="${OUTPUT_DIR}/${sample}.sam"

# Run hisat2 with the paired-end files

"${HISAT2_DIR}hisat2" \

-x "${GENOME_INDEX}" \

-p "${THREADS}" \

-1 "$r1" \

-2 "$r2" \

-S "$output"

doneconvert SAM to BAM

for samfile in "${OUTPUT_DIR}/${sample}"*.sam; do

bamfile="${samfile%.sam}.bam"

sorted_bamfile="${samfile%.sam}.sorted.bam"

# Convert SAM to BAM

"${SAMTOOLS_DIR}samtools" view -bS -@ "${THREADS}" "$samfile" > "$bamfile"

# Sort BAM

"${SAMTOOLS_DIR}samtools" sort -@ "${THREADS}" "$bamfile" -o "$sorted_bamfile"

# Index sorted BAM

"${SAMTOOLS_DIR}samtools" index -@ "${THREADS}" "$sorted_bamfile"

doneStringTie

StringTie uses the sorted BAM files to assemble transcripts for each sample, outputting them as GTF (Gene Transfer Format) files. And then merges all individual GTF assemblies into a single merged GTF file. This step extracts transcript information and merges GTFs from all samples–an important step in creating a canonical list of lncRNAs across all samples included in the pipeline.

find "${OUTPUT_DIR}" -name "*sorted.bam" \

| xargs -n 1 basename -s .sorted.bam | xargs -I{} \

"${STRINGTIE_DIR}stringtie" \

-p "${THREADS}" \

-G "${GENOME_GFF}" \

-o "${OUTPUT_DIR}/{}.gtf" \

"${OUTPUT_DIR}/{}.sorted.bam""${STRINGTIE_DIR}stringtie" \

--merge \

-G "${GENOME_GFF}" \

-o "${OUTPUT_DIR}/stringtie_merged.gtf" \

"${OUTPUT_DIR}/"*.gtf#GFFCOMPARE

"${GFFCOMPARE_DIR}gffcompare" \

-r "${GENOME_GFF}" \

-o "${OUTPUT_DIR}/gffcompare_merged" \

"${OUTPUT_DIR}/stringtie_merged.gtf"head -1 "${OUTPUT_DIR}"/gffcompare_merged*awk '$3 == "transcript" && $1 !~ /^#/' "${OUTPUT_DIR}/gffcompare_merged.annotated.gtf" | \

grep -E "${GFFPATTERN}" | \

awk '($5 - $4 > 199) || ($4 - $5 > 199)' > "${OUTPUT_DIR}/lncRNA_candidates.gtf"Bedtools

"${BEDTOOLS_DIR}"bedtools getfasta \

-fi "${GENOME_FASTA}" \

-bed "${OUTPUT_DIR}/lncRNA_candidates.gtf" \

-fo "${OUTPUT_DIR}/lncRNA_candidates.fasta" \

-name -split#CPC2

eval "$(/opt/anaconda/anaconda3/bin/conda shell.bash hook)"

python "${CPC2_DIR}"CPC2.py \

-i "${OUTPUT_DIR}/lncRNA_candidates.fasta" \

-o "${OUTPUT_DIR}/CPC2"Filter

awk '$8 == "noncoding" {print $1}' "${OUTPUT_DIR}/CPC2.txt" > "${OUTPUT_DIR}/noncoding_transcripts_ids.txt"Subsetting fasta

"${SAMTOOLS_DIR}samtools" faidx "${OUTPUT_DIR}/lncRNA_candidates.fasta" \

-r "${OUTPUT_DIR}/noncoding_transcripts_ids.txt" \

> "${OUTPUT_DIR}/lncRNA.fasta"head -2 "${OUTPUT_DIR}/lncRNA.fasta"# Define input and output file paths using the OUTPUT_DIR variable

input="${OUTPUT_DIR}/noncoding_transcripts_ids.txt"

output="${OUTPUT_DIR}/lncRNA.bed"

# Process each line of the input file

while IFS= read -r line; do

# Remove "transcript::" from the line

line="${line//transcript::/}"

# Split the line by ':' to get the chromosome and position string

IFS=':' read -r chromosome pos <<< "$line"

# Split the position string by '-' to separate start and end positions

IFS='-' read -r start end <<< "$pos"

# Convert the start position to 0-based by subtracting 1

start=$((start - 1))

# Write the chromosome, updated start, and end positions to the output file (tab-separated)

printf "%s\t%s\t%s\n" "$chromosome" "$start" "$end"

done < "$input" > "$output"head -1 "${OUTPUT_DIR}/lncRNA.gtf"awk 'BEGIN{OFS="\t"; count=1} {printf "%s\t.\tlncRNA\t%d\t%d\t.\t+\t.\tgene_id \"lncRNA_%03d\";\n", $1, $2, $3, count++;}' "${OUTPUT_DIR}/lncRNA.bed" \

> "${OUTPUT_DIR}/lncRNA.gtf"Summary Table

tf_file="${OUTPUT_DIR}/lncRNA.gtf"

awk '

BEGIN {

total_entries = 0;

min_length = 1e9;

max_length = 0;

sum_length = 0;

}

# Skip comment lines

/^#/ { next }

{

if (NF < 9) next;

total_entries++;

start = $4;

end = $5;

gene_length = end - start + 1;

if (gene_length < min_length) min_length = gene_length;

if (gene_length > max_length) max_length = gene_length;

sum_length += gene_length;

feature[$3]++;

chrom[$1]++;

# Use two-argument match() and then extract the gene_id manually.

if (match($9, /gene_id "[^"]+"/)) {

gene_str = substr($9, RSTART, RLENGTH);

# Remove the "gene_id " prefix and the quotes.

gsub(/gene_id "/, "", gene_str);

gsub(/"/, "", gene_str);

genes[gene_str] = 1;

}

}

END {

avg_length = (total_entries > 0) ? sum_length / total_entries : 0;

unique_gene_count = 0;

for (g in genes)

unique_gene_count++;

print "Basic GTF File Statistics:";

print "--------------------------";

print "Total entries: " total_entries;

print "Unique genes: " unique_gene_count;

print "Min gene length: " min_length;

print "Max gene length: " max_length;

printf("Average gene length: %.2f\n", avg_length);

print "\nFeature counts:";

for (f in feature) {

print " " f ": " feature[f];

}

print "\nChromosome counts:";

for (c in chrom) {

print " " c ": " chrom[c];

}

}

' "$tf_file"